Describing what digital biology entails has never been an easy task. At times it has been considered a twin to synthetic biology and at others a subset of biodata fields. It is so broad that treating it as a single field may prove to be a mistake, and it may eventually be split into separate categories.

For now, what we know is that digital biology and the process of bio-digitization are, as their names would suggest, fundamentally about data: the collection of it, the storage of it, the modeling of it, and the transfer of it into biological form beyond just ones and zeroes. In the broadest sense, any field utilizing computer modeling and documentation of biological data could fall under this banner, though many would still consider themselves distinct.

Since this article must by necessity be more limited than that, we will focus on some of the more important accomplishments in digital biology and on the emerging science of storing data within DNA itself. So, as usual with these sorts of topics, let’s start with a bit of a history lesson.

Digital Biology: A History

The Early Steps

Setting up a marker for the true beginning of digital biology isn’t a feasible task due to what it encompasses. For our purposes, we’ll be starting in 2003. That’s the year that a national symposium was conducted at the National Institutes of Health (NIH) in Maryland. The topic of this meetup? “Digital Biology: the Emerging Paradigm” was what they decided to go with.

Scientists in biomedical fields especially had noticed that the compilation of biological and medical data was rapidly transforming the industry. It was making their jobs easier by managing databases of scientific information and letting them model chemical and molecular combinations for use in medicine and treatments.

Of course, with things moving along so quickly, issues soon cropped up. Hospitals in different states were using their own individual data systems, and there was no integrated method for sharing scientific discoveries with other researchers in the US or beyond. Solving this was one of the main purposes of the symposium. Networking, the bane of some scientists, was what was needed to properly advance to the next era.

While this conference didn’t solve these problems outright, it did condense them into specific questions that would need to be addressed in the future. The biomedical community would work to fix these over the next decade and combine with other disciplines to set up complex and all-encompassing databases available online to search for genetic sequences, chemical structures, biomolecular markers, and several other things.

A Major Creation

Now we must skip forward past most of that same decade, until we land squarely in 2010. Here, we see the real dawn of the field of digital biology, which coincided with a major breakthrough in synthetic biology as well. This was the creation of life, the making of Synthia.

That year, a group of 24 scientists, led by the renowned J. Craig Venter, Nobel Laureate Hamilton Smith, and the distinguished Clyde A. Hutchison III, succeeded at making a new biological species. Starting with the genome of Mycoplasma mycoides, they brought all of their computer modeling technology to bear. (They had actually started with Mycoplasma genitalium because it had the smallest number of genes known at the time, but it also grows a fair bit slower, so they abandoned it for the faster replicating M. mycoides.)

It took all of the collected scientific knowledge of the Mycoplasma genus and 7 years’ worth of experimentation to succeed with their plan to make new life from scratch. Each gene in the bacterium had to be noted, and the full genome was designed using computer modeling software and then physically constructed nucleotide by nucleotide. That digitized template served to help make the actual modified bacterium that day in 2010, which was later named Mycoplasma laboratorium, or Synthia as a nickname.

Such an experiment suggested that computers could be capable of modeling not just genomes, but also the resulting cellular architecture and the cell as a whole. While this particular test didn’t go quite that far with all the components, it opened the door to such capabilities. Venter is notably recorded as saying that Synthia was the first organism created to have its “parents be a computer”.

As a side note, later experiments by the Venter organization would lead to the Minimal Genome Project that looked to create a bacterial cell with the smallest number of genes possible for survival. This task would find its completion in early 2016. The answer turns out to be 473 genes for that species in question, about half of the original wild-type’s total.

Other Projects

The next big accomplishment in digital biology would stand as an achievement for the field alone, not requiring any other involvement from interdisciplinary scientists. In 2012, only two years after the prior big leap, Karr et al. released the most complex model to date. Going back to that abandoned and simplest bacterial species, Mycoplasma genitalium, the researchers were able to construct a “whole-cell computational model” that followed the entire lifecycle of the bacterium and all of its molecular interactions.

Rather than trying to create such a form in reality, it was kept entirely digital to test the new limits of what could be modeled and determined phenotypically from an in-depth understanding of an organism’s genotype. The data from more than 900 previous studies were included, along with 1900 biological parameters. This extensive modeling process resulted in the unearthing of novel bacterial behaviors and mechanisms, such as the frequency of protein interactions with DNA and how DNA replication rates plunge once the free pool of deoxynucleotides (dNTPs) is used up and replication must instead wait on dNTP synthesis.

Previous models of this type were limited to following just a few metabolic processes in a cell over its lifetime and lacked the computational complexity vital to building the bigger picture involving all the molecular parts. Karr et al. were able to avoid these roadblocks by setting up separate “modules” in the computer program that each followed a single full process, such as one module focusing on transcriptomics and thus all the RNA activity in the cell, another looking at proteomics and all the protein activity, etc. These ended up totaling 28 modules for the model to compute.

Treating the modules as independent of one another over short time spans meant that each could be run with whichever mathematical algorithm best matched the process involved. A separate integration algorithm would then run every second of modeled time to calculate the interactions between the different modules, like the aforesaid DNA-protein interactions.
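To make the module idea a little more concrete, here is a minimal toy sketch in Python. It is not the code from Karr et al.; the two modules, the rates, and the starting pool size are all made-up numbers chosen for illustration. Each module reads the same snapshot of the cell state, and an integration step merges their changes once per simulated second. Even this tiny version reproduces the dNTP effect mentioned earlier: replication runs fast until the free pool is gone, then slows to the pace of synthesis.

```python
# Toy sketch of module-based simulation with a one-second integration step.
# All rates and pool sizes are hypothetical, chosen only for illustration.

SYNTH_RATE = 20.0    # dNTPs synthesized per second (assumed)
REPL_RATE = 100.0    # maximum nucleotides replicated per second (assumed)
GENOME = 2000.0      # toy genome length in nucleotides (assumed)


def metabolism(state, dt):
    """Module 1: produce dNTPs at a fixed rate."""
    return {"dntp": SYNTH_RATE * dt}


def replication(state, dt):
    """Module 2: copy DNA, limited by the dNTP pool it can see."""
    remaining = GENOME - state["replicated"]
    used = min(REPL_RATE * dt, state["dntp"], remaining)
    return {"dntp": -used, "replicated": used}


MODULES = [metabolism, replication]


def simulate(seconds, dt=1.0):
    state = {"dntp": 500.0, "replicated": 0.0}
    history = []
    for _ in range(seconds):
        # Every module works from the same snapshot, so within one step
        # the modules are independent of each other.
        snapshot = dict(state)
        deltas = [module(snapshot, dt) for module in MODULES]
        # Integration step: merge every module's changes into shared state.
        for delta in deltas:
            for key, change in delta.items():
                state[key] += change
        history.append(dict(state))
    return history


if __name__ == "__main__":
    previous = 0.0
    for second, snap in enumerate(simulate(10)):
        rate = snap["replicated"] - previous
        previous = snap["replicated"]
        print(second, "replication rate:", rate, "free dNTP:", snap["dntp"])
```

The design choice worth noticing is that no module ever calls another one directly. All communication happens through the shared state at the integration step, which is what lets each module use its own internal method.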

With this complete model, as an example of the power it could exhibit, the study authors also ran simulations testing what happens if each of the bacterium’s 525 genes is knocked out in turn. In short, they produced an individual set of data for all 525 possible single-gene knockout strains. Of course, many of those ended up just killing the bacterium, though only digitally. But it allowed them to test and confirm the essential function of genes and how they interact with each other.
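The shape of such a knockout screen is simple enough to sketch. In the toy Python example below, the gene names, their functions, and the viability rule are all hypothetical; the real study decided viability by simulating the whole cell, whereas this sketch swaps in a simple lookup (a cell is "viable" if every essential function is still covered by at least one remaining gene).

```python
# Toy single-gene knockout screen. Gene names, functions, and the
# viability rule are hypothetical stand-ins for a full simulation.

GENE_FUNCTIONS = {
    "geneA": {"dna_replication"},
    "geneB": {"transcription"},
    "geneC": {"glycolysis"},
    "geneD": {"glycolysis"},   # redundant with geneC
    "geneE": {"motility"},     # not needed for survival in this toy
}

ESSENTIAL_FUNCTIONS = {"dna_replication", "transcription", "glycolysis"}


def is_viable(active_genes):
    """A cell survives if every essential function is still covered."""
    covered = set().union(*(GENE_FUNCTIONS[g] for g in active_genes))
    return ESSENTIAL_FUNCTIONS <= covered


def single_knockout_screen():
    """Remove each gene in turn and record whether the cell survives."""
    all_genes = set(GENE_FUNCTIONS)
    return {gene: is_viable(all_genes - {gene}) for gene in sorted(all_genes)}


def essential_genes():
    """Genes whose loss alone is lethal."""
    return [g for g, alive in single_knockout_screen().items() if not alive]


if __name__ == "__main__":
    for gene, alive in single_knockout_screen().items():
        print(gene, "viable" if alive else "lethal")
```

Note how geneC and geneD each survive being knocked out because the other covers for it. That kind of redundancy is exactly what a one-gene-at-a-time screen can and cannot reveal.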

But even with the broad capability that whole-cell modeling brings to the table, there are still several hurdles to overcome, including some that have likely not revealed themselves yet. For Karr et al.’s study itself, one issue was that the difficulty in culturing M. genitalium resulted in the authors using matching gene data from studies of other bacterial species that shared some identical genes. Whether this results in an accurate model for the genes in this particular species remained an open question after publication. That, at least, is an easily fixable problem. Just pick a more easily cultivated bacterium to use for whole-cell modeling, like E. coli.

The other major hurdle, though, is what stops researchers from solving the former one. Computing power is, as it has been for many fields, the central stumbling block to accomplishing already conceived goals. E. coli, for example, has nearly 9 times the number of genes, along with many more intersecting biological mechanisms that Karr et al. did not try to address with M. genitalium, like enzyme multifunctionality.

Perhaps the advent of quantum computers will quickly scale us past this being a problem. We’ll have to see.

Digital Microtechnology

This section will act as a quick aside to look at the effect that the expansion of digital biology has had on other related fields of study. The first place that should come to mind for those of you involved in more mechanically minded biological fields is the entire concept of making a “lab-on-a-chip”.

While the term can apply to many advancements in the field of transistor creation and ongoing miniaturization of our modern technology, we’ll be talking about it in the context of moving biological devices to a physical hardware form that can, in turn, digitally process such information. This has proven to be hugely influential in chemistry and the multidisciplinary field of microfluidics.

For scientists in these fields, digital biology modeling has been necessary for figuring out two specific things: how to follow the output of certain biological signals that change depending on the input concentration, and how to follow molecular and multi-cell signaling by looking at individual components. The way to achieve these is to use lab chips that double as analog-to-digital converters, recording physical biological activity and turning it into digital data.

One main example is how this could be used to make digital forms of diagnostic tools like PCR machines and ELISA tests. This could allow miniature physical detection devices to be built on a chip, producing highly sensitive recordings that can be digitally modeled in real time.

Such technologies are far from limited to just nucleic acids and proteins, however. They can be used on a more macro scale to simulate biological mechanisms in organs and interactions between different types of bodily cells to understand the molecular control switches that are involved in, for example, blood clotting. The field of immunology will likely benefit hugely from being able to use lab-on-a-chip devices to activate immune system cascades and digitally model each step, so that the specific systems can be analyzed and simulated in other ways afterwards.

This suggests that digital biology as a whole, when combined with advanced micro and nanotechnology, may be able to compute function at any level of biology, and perhaps even in non-organism based systems like water flow and particle motion. Even with the industry growth in such tech over the past five years or so, there is still much room for specialization.

Bio-Digitization: DNA As A Digital Storage Library

Now that we’ve discussed a fair bit of background history and the general nature of digital biology, we can finally come to the main topic that prompted this article, the recent increase in capability to store data not digitally, but as physical code in DNA.

The actual idea and implementation of doing so is not all that contemporary. The first recorded instance of storing any sort of message in DNA was in 1988, and many experiments of that type have been done since. But, all in all, they had barely gone further than a few kilobits of data. By 2010, the longest piece of information purposefully synthesized into DNA was arguably the previously discussed Synthia project, which came in at just shy of 8 kilobits.

This limited increase is likely due to just how difficult it has been to create nucleotide-perfect strings of DNA without any errors. These studies came before the advent of CRISPR and the far higher accuracy that the gene editing revolution afforded to nucleotide alteration. Additionally, such things had no apparent use at the time, so it was more of a scientific hobby to dabble in than a purposefully investigated topic.

Achieving New Densities

But that 2010 Synthia study really was the kickoff point for so many other experiments. It redefined the limits of what computers could do and what DNA could be used for. In 2012, Church et al. decided to take the entire DNA-as-storage idea to the next level by several orders of magnitude.

Using state-of-the-art DNA synthesis machines and sequencers, they were able to convert an HTML document containing “a book that included 53,426 words, 11 JPG images and 1 JavaScript program” into DNA. A 5 megabit bitstream was established that was ultimately encoded as 54,898 oligonucleotides, each 159 nucleotides long. That length was needed to fit a data block, an address locator, and the basic sequences that help with DNA amplification and sequencing.

The benefit of this system is that each bit corresponded directly to a single nucleotide (“A or C for zero, G or T for one”), making the data more efficient to encode and later recover. Several copies of each sequence were used for redundancy so that errors in sequence recovery, which are rare but do show up at a rate of about 10 errors per 5 million nucleotides, don’t affect the actual data reconstruction.
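The one-bit-per-nucleotide idea is easy to try out. The Python sketch below is my own illustration, not the authors' pipeline: it uses the mapping quoted above (A or C for zero, G or T for one), and it assumes that the free choice between the two bases is spent on never repeating the previous nucleotide. The function names and the simple majority vote across copies are likewise assumptions made for the example.

```python
import random
from collections import Counter

ZERO_BASES = "AC"  # either base encodes a 0
ONE_BASES = "GT"   # either base encodes a 1


def text_to_bits(text):
    return "".join(f"{byte:08b}" for byte in text.encode("utf-8"))


def bits_to_text(bits):
    data = bytes(int(bits[i:i + 8], 2) for i in range(0, len(bits), 8))
    return data.decode("utf-8")


def bits_to_dna(bits, rng=None):
    """One nucleotide per bit, never repeating the previous base."""
    rng = rng or random.Random(0)
    seq = []
    for bit in bits:
        choices = ZERO_BASES if bit == "0" else ONE_BASES
        # At most one of the two candidates equals the previous base,
        # so at least one option always remains.
        options = [b for b in choices if not seq or b != seq[-1]]
        seq.append(rng.choice(options))
    return "".join(seq)


def dna_to_bits(seq):
    return "".join("0" if base in ZERO_BASES else "1" for base in seq)


def consensus_bits(reads):
    """Majority vote per position across redundant copies."""
    decoded = [dna_to_bits(read) for read in reads]
    return "".join(Counter(col).most_common(1)[0][0] for col in zip(*decoded))


if __name__ == "__main__":
    bits = text_to_bits("Hi")
    dna = bits_to_dna(bits)
    print(dna, "->", bits_to_text(dna_to_bits(dna)))
```

Because two different bases can stand for the same bit, independent copies of a message may look different at the DNA level while still decoding to identical data, which is why the vote is taken on bits rather than on bases.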

The density achieved with this new system averaged about 700 terabytes of information per gram of DNA. This easily made it the densest data storage medium in the world, well beyond modern computer systems that have reached 2-3 terabytes per square inch. Even if that figure is generously extended to a full cubic inch, a cubic inch still holds about 16 grams’ worth of material, showing that DNA storage far outstrips mechanical hardware.

And that was just the beginning. Following that, in 2013, Goldman et al. modified the storage methods to allow for up to 2.2 petabytes per gram of DNA. They replaced the binary code of Church et al. with a ternary code (0, 1, 2). With four nucleotides available, each ternary digit could always be written as one of the three nucleotides that differ from the one just before it, ensuring that the same nucleotide never appears twice in a row. This heavily reduced the possibility of DNA reading errors when sequencing the data.
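As I understand the published ternary scheme, each base-3 digit is written as one of the three nucleotides that differ from the nucleotide immediately before it, so the same base can never appear twice in a row. The Python sketch below illustrates that idea under stated assumptions: the rotation table, the virtual starting base, and the fixed six-digit base-3 expansion of each byte are my own simplifications, not details taken from the paper.

```python
# Rotating ternary code: the next base depends on the previous base and
# the digit (0, 1, 2). The table itself is an illustrative assumption.
NEXT_BASE = {
    "A": "CGT",
    "C": "GTA",
    "G": "TAC",
    "T": "ACG",
}

TRITS_PER_BYTE = 6  # 3**6 = 729, enough to cover 256 byte values


def byte_to_trits(value):
    digits = []
    for _ in range(TRITS_PER_BYTE):
        value, remainder = divmod(value, 3)
        digits.append(remainder)
    return digits[::-1]  # most significant digit first


def trits_to_byte(trits):
    value = 0
    for trit in trits:
        value = value * 3 + trit
    return value


def trits_to_dna(trits, start="A"):
    """Write each digit as a base that differs from the previous one."""
    previous, seq = start, []
    for trit in trits:
        previous = NEXT_BASE[previous][trit]
        seq.append(previous)
    return "".join(seq)


def dna_to_trits(seq, start="A"):
    previous, trits = start, []
    for base in seq:
        trits.append(NEXT_BASE[previous].index(base))
        previous = base
    return trits


def encode_bytes(data):
    trits = [t for byte in data for t in byte_to_trits(byte)]
    return trits_to_dna(trits)


def decode_bytes(seq):
    trits = dna_to_trits(seq)
    groups = range(0, len(trits), TRITS_PER_BYTE)
    return bytes(trits_to_byte(trits[i:i + TRITS_PER_BYTE]) for i in groups)


if __name__ == "__main__":
    strand = encode_bytes(b"DNA")
    print(strand, "->", decode_bytes(strand))
```

The trade-off is a slightly lower information rate per nucleotide (three choices instead of four) in exchange for strands that are structurally guaranteed to contain no repeated bases.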

The Limits and Beyond

In 2016, researchers presenting at an international conference on programming languages and operating systems proposed actually building a DNA-based archival system for data storage. They explained that the theoretical limit for storage density in DNA sits at about 1 exabyte (1000 petabytes) per cubic millimeter of DNA, a volume roughly 1000 times smaller than the grams of DNA discussed previously.

Of course, it would not be an easy limit to reach. As with physical hardware, we can talk about the limits of density all we want, but actually reaching the ability to encode at those densities isn’t so simple, and may not even be realistically possible regardless of theory. But it can act as a general guideline or goal to strive for.

And it is something that we likely should try to reach. The capacity requirements of the global “digital universe” continue to increase year by year, with storage availability needing to grow by an incredibly high 50% every consecutive year. Currently, in 2017, the world is expected to require over 16 zettabytes (1 zettabyte is 1000 exabytes) of storage to meet demands. That number will only grow over time.
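To put those numbers in perspective, here is a quick back-of-the-envelope calculation in Python. It uses only figures quoted in this article (16 zettabytes in 2017, 50% yearly growth, and the 700 terabytes and 2.2 petabytes per gram densities), and it assumes decimal units and steady compound growth, which is a simplification on my part.

```python
# Back-of-the-envelope storage arithmetic using figures from the article.
# Decimal (SI) units and steady compound growth are assumptions.

TB = 10**12
PB = 10**15
ZB = 10**21

DEMAND_2017 = 16 * ZB      # bytes needed worldwide in 2017
YEARLY_GROWTH = 0.50       # 50% more storage needed each year

CHURCH_DENSITY = 700 * TB  # bytes per gram of DNA (2012 figure)
GOLDMAN_DENSITY = 2.2 * PB # bytes per gram of DNA (2013 figure)


def demand_after(years):
    """Projected demand in bytes after a number of years."""
    return DEMAND_2017 * (1 + YEARLY_GROWTH) ** years


def grams_needed(num_bytes, bytes_per_gram):
    """Mass of DNA required to hold num_bytes at a given density."""
    return num_bytes / bytes_per_gram


if __name__ == "__main__":
    for years in (0, 5, 10):
        zettabytes = demand_after(years) / ZB
        tonnes = grams_needed(demand_after(years), GOLDMAN_DENSITY) / 1e6
        print(f"+{years:2d} years: {zettabytes:8.1f} ZB, "
              f"about {tonnes:7.1f} tonnes of DNA at 2.2 PB/g")
```

Under these assumptions, the entire 2017 demand works out to roughly 7 tonnes of DNA at the 2.2 petabytes per gram density, or about 23 tonnes at 700 terabytes per gram, and demand reaches about 121.5 zettabytes after five years of 50% growth.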

Perhaps we will finally reach a limit where storage demands taper off and become stable, but even if global population stabilizes, it is unlikely to prevent the ongoing archiving of new information that is generated. Quantum computers may eventually allow for expansive memory and computing potential, but they will not alleviate the need for storage.

DNA may truly prove to be the only answer to such a conundrum.

It may also lead to sci-fi-esque futures where every individual’s personal health information is stored within their own genome. Going to the doctor and having blood drawn may not just include biomolecular information on one’s health, but also all the actual stored data documents from one’s past that would allow hospitals to quickly and accurately chart medical conditions and medication requirements.

Of course, there is also the possible scary side of things with such data being used to control groups of people and record negative information about them. But those issues are always a potential outcome of scientific advancements. We all must push for proper and beneficial usage of new technologies and not let the possibility of bad outcomes squander our capability to improve the health and living conditions of people around the world.

We must be vigilant and we must work together to have scientific discoveries be used in a way that helps all of humanity. Digital biology as a whole and improvements in bio-digitization may be one of the keys, in conjunction with synthetic biology and other fields, to propel us toward a post-scarcity and post-disease planet. I, personally, look forward to it.

References

1. Minie, M. E., & Samudrala, R. (2013). The Promise and Challenge of Digital Biology. Journal of Bioengineering & Biomedical Science, 3, 118. doi:10.4172/2155-9538.1000e118

2. Müller, M. (2016). Chapter: “First Species Whose Parent Is a Computer” — Synthetic Biology as Technoscience, Colonizing Futures, and the Problem of the Digital. Ambivalences of Creating Life (Vol. 45, ETHICSSCI). Cham, Switzerland: Springer International. doi:10.1007/978-3-319-21088-9_5

3. What Is Digital Biology? (2005, June). The Scientist, 19(11). Retrieved August 23, 2017, from http://www.the-scientist.com/?articles.view/articleNo/16478/title/What-Is-Digital-Biology-/

4. Sleator, R. D. (2012). Digital biology. Bioengineered, 3(6), 311-312. doi:10.4161/bioe.22367

5. Morris, R. W., Bean, C. A., Farber, G. K., Gallahan, D., Jakobsson, E., Liu, Y., . . . Skinner, K. (2005). Digital biology: an emerging and promising discipline. Trends in Biotechnology, 23(3), 113-117. doi:10.1016/j.tibtech.2005.01.005

6. Witters, D., Sun, B., Begolo, S., Rodriguez-Manzano, J., Robles, W., & Ismagilov, R. F. (2014). Digital biology and chemistry. Lab on a Chip, 14, 3225-3232. Retrieved August 23, 2017, from http://pubs.rsc.org/en/content/articlehtml/2014/lc/c4lc00248b

7. Karr, J. R., Sanghvi, J. C., Macklin, D. N., Gutschow, M. V., Jacobs, J. M., Bolival, B., . . . Covert, M. W. (2012). A Whole-Cell Computational Model Predicts Phenotype from Genotype. Cell, 150(2), 389-401. doi:10.1016/j.cell.2012.05.044

8. Freddolino, P. L., & Tavazoie, S. (2012). The Dawn of Virtual Cell Biology. Cell, 150(2), 248-250. doi:10.1016/j.cell.2012.07.001

9. De Silva, P. Y., & Ganegoda, G. U. (2016). New Trends of Digital Data Storage in DNA. BioMed Research International, 1-14. doi:10.1155/2016/8072463

10. Bornholt, J., Lopez, R., Carmean, D. M., Ceze, L., Seelig, G., & Strauss, K. (2016). A DNA-Based Archival Storage System. Proceedings of the Twenty-First International Conference on Architectural Support for Programming Languages and Operating Systems, 637-649. Retrieved August 23, 2017, from https://homes.cs.washington.edu/~luisceze/publications/dnastorage-asplos16.pdf

11. Venter, J. C., Gibson, D. G., Glass, J. I., Lartigue, C., Noskov, V. N., Chuang, R. Y., . . . Smith, H. O. (2010). Creation of a bacterial cell controlled by a chemically synthesized genome [Abstract]. Science, 329(5987), 52-56. doi:10.1126/science.1190719

12. Sleator, R. D., & O’Driscoll, A. (2013). Digitizing humanity. Artificial DNA: PNA & XNA, 4(2), 37-38. doi:10.4161/adna.25489

13. Church, G. M., Gao, Y., & Kosuri, S. (2012). Next-Generation Digital Information Storage in DNA. Science, 337(6102), 1628. doi:10.1126/science.1226355

14. Goldman, N., Bertone, P., Chen, S., Dessimoz, C., LeProust, E. M., Sipos, B., & Birney, E. (2013). Toward practical high-capacity low-maintenance storage of digital information in synthesised DNA. Nature, 494(7435), 77-80. doi:10.1038/nature11875

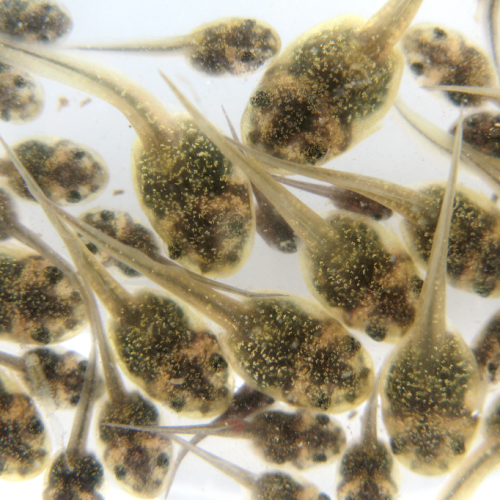

Photo CCs: BinaryData50 from Wikimedia Commons